By Dr. Reza Chaji, Founder and CEO, VueReal

At Display Week 2025, one message was clear: artificial intelligence isn’t just enhancing user experiences—it’s redefining how humans and machines communicate. From automotive dashboards to wearables and industrial tools, displays are evolving from passive panels into intelligent interfaces that adapt, anticipate, and interact with users.

Microsoft’s keynote effectively captured this shift, describing AI as the engine of multimodal human-machine interfaces: systems that can see, hear, speak, and respond in real time. In my own talk at the conference, I echoed many of the same themes, above all that AI is driving foundational changes in hardware expectations, particularly for displays.

Displays are no longer static outputs. They are becoming dynamic inputs, deeply integrated with sensors, edge computing, and AI accelerators. This convergence is pushing display technologies into new territory — demanding greater brightness, efficiency, and intelligence at the pixel level.

At the conference, a growing consensus emerged that only one display technology is ready to meet these AI-driven demands: microLED.

From Unchanging Screens to Dynamic Decision Engines

As AI becomes more central to how we work, live, and interact, displays must evolve from static content presenters into intelligent, adaptable interfaces. Aparna Chennapragada, Chief Product Officer at Microsoft, emphasized during her Display Week 2025 keynote that the human brain processes visual information in as little as 13 milliseconds, approximately 60,000 times faster than text. With 30% of our brain’s cortex devoted to vision (versus 8% for touch and just 3% for hearing), she argued, the future of AI interfaces must be intensely visual and multimodal, which places displays at the center of that future.

Chennapragada introduced the concept of “model-forward” displays, where pixels are no longer passive but are generated and optimized by AI models. She proposed treating brightness, resolution, and frame rate as parts of a “token budget”: tunable dimensions that adapt dynamically to the user’s needs. For instance, an elderly user might see a simplified, high-contrast interface, while a power user sees a data-dense dashboard, both for the same task.

This philosophy suggests a profound shift in display design: from one-size-fits-all design to personalized displays that render AI-generated visuals optimized in real time.
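To make the “token budget” idea more concrete, here is a minimal Python sketch. It assumes, purely for illustration, that a fixed integer budget is split across brightness, resolution, and frame rate in proportion to per-profile weights. The profile names, the weights, and the `allocate_budget` function are hypothetical and are not taken from Microsoft’s or VueReal’s work.

```python
# Hypothetical per-profile weights over the three dimensions named in the keynote.
# Names and numbers are illustrative assumptions only.
PROFILES: dict[str, dict[str, int]] = {
    "simplified": {"brightness": 5, "resolution": 2, "frame_rate": 3},  # e.g. high-contrast view
    "data_dense": {"brightness": 2, "resolution": 6, "frame_rate": 2},  # e.g. power-user dashboard
}


def allocate_budget(total_tokens: int, weights: dict[str, int]) -> dict[str, int]:
    """Split an integer token budget in proportion to weights (largest-remainder method)."""
    if total_tokens < 0:
        raise ValueError("budget must be non-negative")
    weight_sum = sum(weights.values())
    if weight_sum <= 0:
        raise ValueError("weights must sum to a positive number")
    exact = {k: total_tokens * w / weight_sum for k, w in weights.items()}
    alloc = {k: int(v) for k, v in exact.items()}  # floor each exact share
    leftover = total_tokens - sum(alloc.values())
    # Give the remaining tokens to the dimensions with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda k: exact[k] - alloc[k], reverse=True)
    for k in by_remainder[:leftover]:
        alloc[k] += 1
    return alloc


if __name__ == "__main__":
    for name, weights in PROFILES.items():
        print(name, allocate_budget(100, weights))
```

With a 100-token budget, the simplified profile receives 50/20/30 tokens for brightness/resolution/frame rate, while the data-dense profile shifts most of the budget to resolution (20/60/20). The largest-remainder step keeps the shares summing exactly to the budget when it does not divide evenly.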

MicroLED: Enabling Human-Centered AI Through Hardware

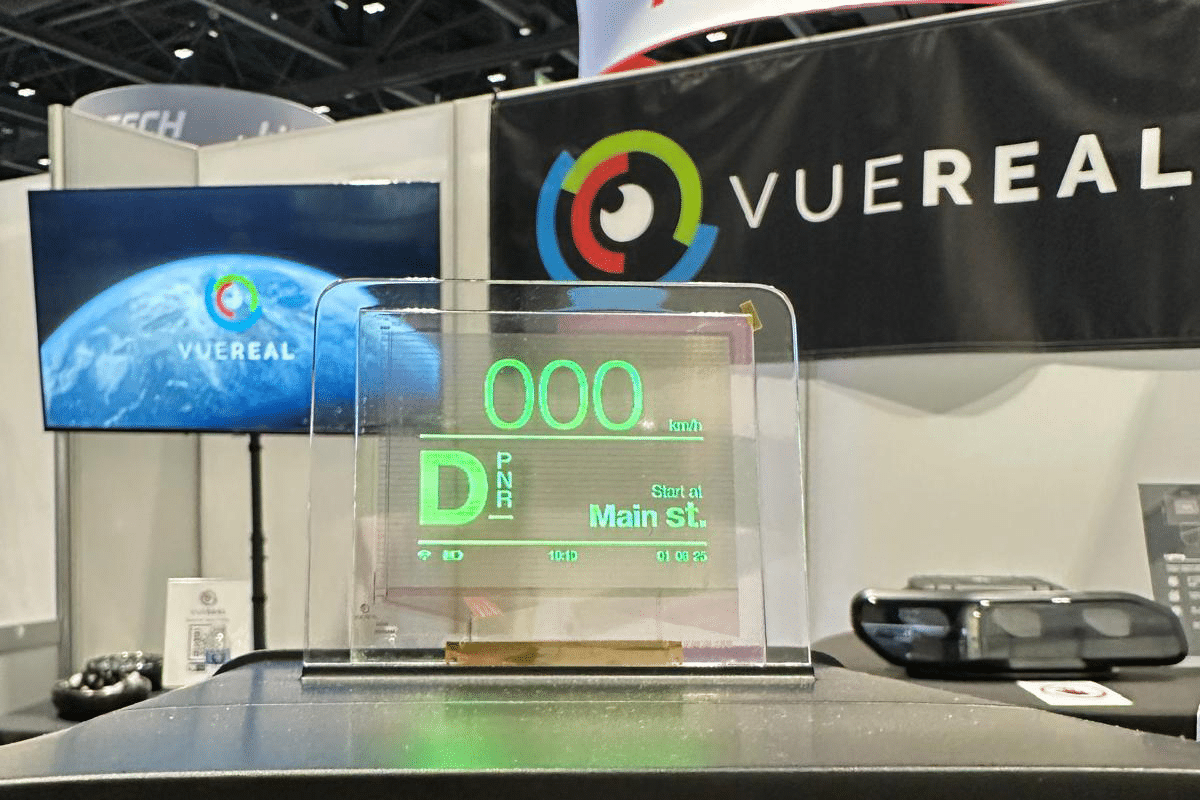

Building on these conceptual shifts, VueReal presented a hardware blueprint for bringing such intelligent interfaces to life. Human-machine interfaces (HMIs) must be non-intrusive, highly adaptive, and seamlessly integrated into their environment, particularly in autonomous systems, AR/VR devices, and wearables.

VueReal proposes that displays become true extensions of AI’s decision-making by integrating the following:

1. Transparent and Multi-Sensing Displays

VueReal’s microLEDs achieve over 85% transparency while maintaining high brightness, making them ideal for head-up displays (HUDs), smart glass, and augmented reality. These displays can simultaneously visualize AI outputs and collect environmental or biometric data via integrated microsensors.

2. Dynamic and Context-Aware Interfaces

In automotive and AR scenarios, VueReal emphasizes the need for displays that adjust content and modality in real time depending on lighting conditions, user behavior, and environmental context.
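As a rough illustration of context-aware adjustment, the Python sketch below maps ambient light to a target luminance and vehicle speed to a level of on-screen detail. The breakpoints, thresholds, and function names are hypothetical assumptions chosen for readability, not VueReal specifications. Interpolating in log10(lux) is simply a convenient way to cover the many orders of magnitude that ambient light spans.

```python
import bisect
import math

# Hypothetical calibration curve: ambient illuminance (lux) -> target luminance (nits).
# These breakpoints are illustrative assumptions, not product specifications.
LUX_POINTS = [1.0, 100.0, 10_000.0, 100_000.0]
NIT_POINTS = [5.0, 200.0, 1_500.0, 5_000.0]


def target_luminance(ambient_lux: float) -> float:
    """Interpolate target luminance linearly in log10(lux), clamped at both ends."""
    if ambient_lux <= LUX_POINTS[0]:
        return NIT_POINTS[0]
    if ambient_lux >= LUX_POINTS[-1]:
        return NIT_POINTS[-1]
    # Index of the breakpoint segment that contains ambient_lux.
    i = bisect.bisect_right(LUX_POINTS, ambient_lux) - 1
    x0, x1 = math.log10(LUX_POINTS[i]), math.log10(LUX_POINTS[i + 1])
    t = (math.log10(ambient_lux) - x0) / (x1 - x0)
    return NIT_POINTS[i] + t * (NIT_POINTS[i + 1] - NIT_POINTS[i])


def content_level(speed_kmh: float) -> str:
    """Hypothetical rule: show less detail as driving speed rises."""
    if speed_kmh > 90:
        return "minimal"
    if speed_kmh > 30:
        return "standard"
    return "detailed"


if __name__ == "__main__":
    for lux, speed in [(0.5, 20), (1_000, 50), (80_000, 110)]:
        print(lux, speed, round(target_luminance(lux)), content_level(speed))
```

For example, `target_luminance(1_000)` falls halfway (in log terms) between the 100 lux and 10,000 lux breakpoints, giving 850 nits under these assumed values.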

3. High Pixel Efficiency and Customization

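Leveraging shared pixel circuits and micro driver architectures, VueReal demonstrates how displays can achieve high transparency and low power consumption even at high resolutions: because driver circuitry is shared among pixels, less of each pixel cell is occupied by opaque circuitry, so more of the panel stays clear as pixel density rises. This enables the token-efficient visual delivery that Chennapragada envisions.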
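The link between shared pixel circuits and transparency can be sketched with a simplified geometric model, assumed here for illustration: each pixel cell of pitch p loses area to its microLED emitters (A_e) and to its share of a driver circuit (A_c) that serves N pixels, so the open fraction is roughly 1 - (A_e + A_c/N)/p². This open-area fraction is used as a proxy for transparency; substrate absorption, wiring, and optical losses are ignored. The dimensions below are hypothetical, not VueReal figures.

```python
def open_area_fraction(pitch_um: float, emitter_area_um2: float,
                       circuit_area_um2: float, pixels_per_circuit: int) -> float:
    """Open (clear) fraction of one pixel cell under a simplified geometric model.

    Opaque area = emitter footprint + this pixel's share of a driver circuit
    shared by `pixels_per_circuit` pixels. Wiring and material losses are ignored.
    """
    if pixels_per_circuit < 1:
        raise ValueError("a circuit must drive at least one pixel")
    cell_area = pitch_um ** 2
    opaque = emitter_area_um2 + circuit_area_um2 / pixels_per_circuit
    if opaque > cell_area:
        raise ValueError("opaque features do not fit in the pixel cell")
    return 1.0 - opaque / cell_area


if __name__ == "__main__":
    # Hypothetical dimensions: 300 um^2 of emitters, 2,000 um^2 of driver circuitry.
    for pitch in (100.0, 50.0):  # a smaller pitch means a higher resolution
        for n in (1, 4):
            t = open_area_fraction(pitch, 300.0, 2_000.0, n)
            print(f"pitch {pitch:.0f} um, {n} pixel(s) per circuit: {t:.0%} open")
```

Under these assumed numbers, halving the pitch from 100 µm to 50 µm drops the open area from 77% to 8% when every pixel has its own circuit, but only from 92% to 68% when four pixels share one, which is the qualitative effect described above.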

Toward a Model-Forward Display Architecture

By aligning Chennapragada’s software-centric “model-forward” vision with VueReal’s microLED-based HMI architecture, a new generation of intelligent displays becomes possible: displays in which each pixel is dynamically optimized, task-aware, and user-specific.

Instead of fixed resolutions and layouts, displays can now:

- Adapt their complexity and energy use based on user profile and task.

- Deliver sensory-aware visuals that match context and cognitive load.

- Function as both input and output, integrating vision, gesture, and biometrics.

This turns displays from passive endpoints into real-time AI collaborators, making the interface truly human-centered.

Rethinking MicroLED Manufacturing for the AI Age

The display industry is at an inflection point. As AI drives demand for adaptive, human-centered interfaces, it is increasingly evident that microLEDs cannot and should not follow the same industrialization path as LCD or OLED technologies.

Historically, OLED and LCD technologies have relied on highly centralized, capital-intensive megafabs optimized for the mass production of largely standardized products. While this model enabled cost efficiencies for uniform consumer goods, it inherently limits flexibility, slows innovation, and is poorly suited to the era of intelligent, personalized displays.

In contrast, the microLED revolution must be rooted in agile, decentralized fabrication ecosystems. The diversity of AI-era applications, from automotive HUDs and wearable health trackers to AR glasses and smart surfaces, demands customized form factors, varying resolutions, diverse substrate integrations, and rapid design iteration cycles.

The Case for MicroSolid Printing

VueReal’s MicroSolid Printing platform is specifically designed to meet these next-generation demands. Rather than centralizing production into massive factories, this technology enables low- to mid-volume fabrication in modular, distributed facilities. This approach unlocks several key advantages:

- Rapid Customization: Each production node can be optimized for specific use cases — automotive, medical, or industrial — allowing for quicker adaptation to evolving customer requirements.

- Scalable Innovation: Smaller, localized facilities lower the barrier to entry for new product development, enabling startups, OEMs, and research labs to participate in and accelerate display innovation.

- Cost Efficiency Without Scale Dependence: Unlike OLED fabs that rely on economies of scale, MicroSolid Printing delivers cost-effective production even at lower volumes, thanks to material efficiency, reduced waste, and simplified equipment.

- Localized Supply Chains: A decentralized model reduces reliance on single-region megafabs, enhancing resilience and supporting local economic development.
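The “cost efficiency without scale dependence” argument can be illustrated with a generic amortization model, assumed here rather than taken from VueReal: unit cost = fixed cost / volume + variable cost per unit. A facility with a large fixed investment becomes cheap per unit only at high volume, while a low-fixed-cost line stays affordable for small runs. All figures below are hypothetical, in arbitrary cost units.

```python
from typing import Optional


def unit_cost(fixed_cost: float, variable_cost: float, volume: int) -> float:
    """Per-unit cost when a fixed investment is amortized over `volume` units."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return fixed_cost / volume + variable_cost


def crossover_volume(fixed_a: float, var_a: float,
                     fixed_b: float, var_b: float) -> Optional[float]:
    """Volume at which facilities A and B reach equal unit cost.

    Solves fixed_a / V + var_a == fixed_b / V + var_b for V.
    Returns None when no positive crossover exists.
    """
    if var_a == var_b:
        return None
    v = (fixed_a - fixed_b) / (var_b - var_a)
    return v if v > 0 else None


if __name__ == "__main__":
    # Hypothetical: a megafab-style line (high fixed, low variable cost)
    # versus a modular line (low fixed, higher variable cost).
    MEGAFAB = (1_000.0, 1.0)
    MODULAR = (50.0, 2.0)
    for volume in (100, 10_000):
        print(volume, unit_cost(*MEGAFAB, volume), unit_cost(*MODULAR, volume))
    print("crossover at", crossover_volume(*MEGAFAB, *MODULAR), "units")
```

Under these made-up numbers, the modular line is cheaper per unit below about 950 units and the megafab-style line is cheaper above it. The sketch only shows why low fixed costs make small production runs viable; it does not claim that either model wins at every volume.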

Formed Ecosystem: The Key Enabler

To fully realize this vision, VueReal is fostering a “Formed Ecosystem” — a collaborative platform comprising tool developers, material suppliers, integrators, and product designers, all aligned around MicroSolid Printing. This ecosystem enables:

- Standardized yet flexible design toolkits

- Seamless integration into diverse applications

- Rapid prototyping and iteration cycles

- Shared innovation infrastructure across geographies

Display Week 2025 did not disappoint. With the industry’s brightest minds unveiling groundbreaking innovations and setting bold new trends, the future of display technology has never looked more exciting.